- Ingest: nothing special. Just store the VC-6 content like any other media asset.

- Define a metadata document identifying the properties of each resolution available in the stored content (e.g. `lo` = low-resolution asset at 240 x 135 pixels, Rec.709 SDR).

- Define a simple API adapter that converts the simple fetching operations of existing tools (e.g. AI segmentation) into requests that follow Time Addressable Media principles. Ideally, this should work with tools like AWS Rekognition, THEOplayer, shaka-player or video.js.

- BASIC: high-volume AI pass

  - choose the lowest practical resolution and scan every asset for audio-visual engagement metrics

  - use a human-assisted AI decision tree to filter the list down to a reasonable number of feeds for editorial review (2nd-pass metrics generation)
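The metadata document from the second step could be as small as a JSON object keyed by resolution name. The sketch below assumes that layout: only the `lo` entry (240 x 135, Rec.709 SDR) comes from the text, while the field names (`levels`, `colour`, `dynamic_range`), the asset ID and the `mid`/`hi` levels are hypothetical placeholders.

```python
import json
from dataclasses import dataclass

# Hypothetical metadata document for one stored VC-6 asset.
# Only the "lo" entry (240 x 135, Rec.709 SDR) comes from the text; the
# field names and the other levels are illustrative assumptions.
METADATA_JSON = """
{
  "asset_id": "example-asset-0001",
  "codec": "VC-6",
  "levels": {
    "lo":  {"width": 240,  "height": 135,  "colour": "Rec.709", "dynamic_range": "SDR"},
    "mid": {"width": 960,  "height": 540,  "colour": "Rec.709", "dynamic_range": "SDR"},
    "hi":  {"width": 3840, "height": 2160, "colour": "Rec.709", "dynamic_range": "SDR"}
  }
}
"""


@dataclass(frozen=True)
class Level:
    """Properties of one resolution that can be retrieved from the asset."""
    name: str
    width: int
    height: int
    colour: str
    dynamic_range: str


def load_levels(doc_text: str) -> list[Level]:
    """Parse the metadata document and return its levels, smallest first."""
    doc = json.loads(doc_text)
    levels = [Level(name=name, **props) for name, props in doc["levels"].items()]
    return sorted(levels, key=lambda lv: lv.width * lv.height)


if __name__ == "__main__":
    for lv in load_levels(METADATA_JSON):
        print(f"{lv.name}: {lv.width} x {lv.height} {lv.colour} {lv.dynamic_range}")
```

Sorting by pixel count means a tool can ask for "the smallest level that is good enough" without relying on the order in which levels appear in the document.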
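The API adapter could, for example, accept the kind of call an existing tool already makes ("give me this time range at this resolution") and expand it into ranged HTTP requests against time-addressed segments. This is a minimal sketch, not a TAMS client: the `Segment` index, the `plan_fetch` name, the URLs and the byte offsets are all hypothetical, and it assumes each resolution can be read as one contiguous byte range per segment, which would need checking against how the VC-6 files are actually stored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One time-addressed chunk of the stored asset (hypothetical index entry)."""
    start: float       # seconds, inclusive
    end: float         # seconds, exclusive
    url: str           # object location in generic storage
    level_bytes: dict  # level name -> (first_byte, last_byte), inclusive (assumed layout)


def plan_fetch(segments, start, end, level):
    """Turn a tool's simple 'give me [start, end) at `level`' call into
    ranged HTTP GET requests, one per overlapping segment."""
    if start >= end:
        raise ValueError("start must be before end")
    requests = []
    for seg in segments:
        if seg.end <= start or seg.start >= end:
            continue  # segment does not overlap the requested time range
        first, last = seg.level_bytes[level]
        requests.append((seg.url, {"Range": f"bytes={first}-{last}"}))
    return requests


# Hypothetical two-segment index for one asset.
SEGMENTS = [
    Segment(0.0, 2.0, "https://storage.example.com/asset-0001/seg-0000.vc6",
            {"lo": (0, 4095), "hi": (0, 65535)}),
    Segment(2.0, 4.0, "https://storage.example.com/asset-0001/seg-0001.vc6",
            {"lo": (0, 4095), "hi": (0, 65535)}),
]

if __name__ == "__main__":
    # A tool asking for 1.5 s to 3.0 s at low resolution touches both segments.
    for url, headers in plan_fetch(SEGMENTS, 1.5, 3.0, "lo"):
        print(url, headers["Range"])
```

Each `(url, headers)` pair can be handed to an ordinary HTTP client, for example `urllib.request.Request(url, headers=headers)`, so the calling tool does not have to deal with the storage layout itself.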
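The BASIC-tier filter can be pictured as a small decision tree whose leaves either keep a feed, drop it, or hand it to an editor. The sketch below is a hand-built threshold tree standing in for whatever AI model the workflow actually uses; the metric names (`engagement`, `audio_activity`), thresholds and feed IDs are hypothetical, and `ask_human` is a placeholder for the editorial review step.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Union


@dataclass(frozen=True)
class Leaf:
    decision: str  # "keep", "drop" or "ask" (route to a human editor)


@dataclass(frozen=True)
class Node:
    metric: str      # which metric this node tests
    threshold: float
    below: "Tree"    # followed when metric < threshold
    above: "Tree"    # followed when metric >= threshold


Tree = Union[Leaf, Node]


def decide(tree: Tree, metrics: Dict[str, float]) -> str:
    """Walk the tree for one feed's metrics and return the leaf decision."""
    while isinstance(tree, Node):
        tree = tree.above if metrics[tree.metric] >= tree.threshold else tree.below
    return tree.decision


def filter_feeds(feeds: Dict[str, Dict[str, float]], tree: Tree,
                 ask_human: Callable[[str, Dict[str, float]], bool],
                 max_feeds: int) -> List[str]:
    """Keep feeds the tree accepts (asking a human on 'ask' leaves), then cap
    the list at max_feeds, highest engagement first."""
    kept = []
    for feed_id, metrics in feeds.items():
        decision = decide(tree, metrics)
        if decision == "ask":
            decision = "keep" if ask_human(feed_id, metrics) else "drop"
        if decision == "keep":
            kept.append(feed_id)
    kept.sort(key=lambda f: feeds[f]["engagement"], reverse=True)
    return kept[:max_feeds]


# Hypothetical tree: low engagement is dropped; high engagement with little
# audio activity is ambiguous and goes to an editor.
TREE = Node("engagement", 0.6,
            below=Leaf("drop"),
            above=Node("audio_activity", 0.3, below=Leaf("ask"), above=Leaf("keep")))

FEEDS = {
    "cam1": {"engagement": 0.9, "audio_activity": 0.8},
    "cam2": {"engagement": 0.7, "audio_activity": 0.1},
    "cam3": {"engagement": 0.4, "audio_activity": 0.9},
    "cam4": {"engagement": 0.65, "audio_activity": 0.5},
}

if __name__ == "__main__":
    # Stand-in for an editor's yes/no answer on ambiguous feeds.
    def editor_says_yes(feed_id, metrics):
        return feed_id == "cam2"

    print(filter_feeds(FEEDS, TREE, editor_says_yes, max_feeds=2))
```

Keeping the human only on the "ask" leaves is what lets this pass stay high-volume: editors see the ambiguous cases, not every feed.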

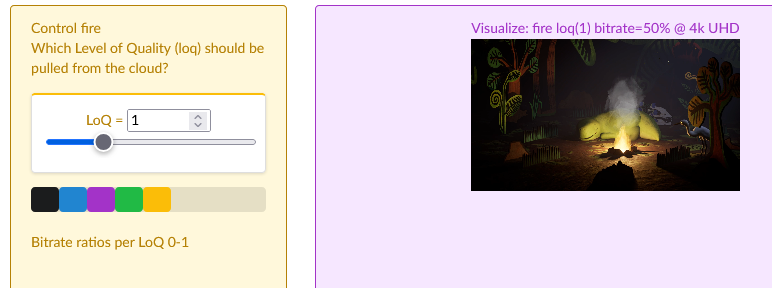

- MID: full scan of candidate feeds/clips

  - increase the resolution and re-scan every candidate with higher-fidelity settings to retrieve better metrics, transcripts and quality data for each clip

- FULL: clips selected for air

  - do the extra compliance, editorial and graphics passes at the highest required resolution
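The three tiers differ mainly in which resolution they pull from the same stored asset. A minimal sketch of that choice follows, assuming a ladder of renditions that halves in each dimension from 3840 x 2160 down to the 240 x 135 `lo` level. Everything except `lo` is hypothetical, as is the MID rule of stepping one level above the BASIC choice.

```python
from enum import Enum
from typing import List, Tuple

Rendition = Tuple[str, int, int]  # (name, width, height)

# Resolutions retrievable from the single stored asset, smallest first.
# Only "lo" (240 x 135) comes from the text; the others are hypothetical.
RENDITIONS: List[Rendition] = [
    ("lo", 240, 135),
    ("270p", 480, 270),
    ("540p", 960, 540),
    ("1080p", 1920, 1080),
    ("2160p", 3840, 2160),
]


class Tier(Enum):
    BASIC = "high-volume AI pass"
    MID = "full scan of candidate feeds/clips"
    FULL = "clips selected for air"


def lowest_at_least(min_height: int) -> Rendition:
    """Smallest rendition at least min_height tall (top level if none is)."""
    for rendition in RENDITIONS:
        if rendition[2] >= min_height:
            return rendition
    return RENDITIONS[-1]


def pick_rendition(tier: Tier, tool_min_height: int, delivery_height: int) -> Rendition:
    """Choose the resolution to retrieve for a pass at the given tier."""
    basic = lowest_at_least(tool_min_height)  # lowest practical for the tool
    if tier is Tier.BASIC:
        return basic
    if tier is Tier.MID:
        # Hypothetical policy: one level above the BASIC choice, capped at the top.
        i = RENDITIONS.index(basic)
        return RENDITIONS[min(i + 1, len(RENDITIONS) - 1)]
    # FULL: the highest *required* resolution, which need not be the highest stored.
    return lowest_at_least(max(tool_min_height, delivery_height))


if __name__ == "__main__":
    for tier in Tier:
        print(tier.name, pick_rendition(tier, tool_min_height=120, delivery_height=1080))
```

Targeting the required delivery height in FULL, rather than simply the largest level, keeps the compliance and graphics passes from fetching more data than the output needs.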

The important point of this PoC is that no proxies are generated. The content exists as a single asset, held in generic storage and retrieved programmatically at the resolution each step of the user's workflow needs.